Guest post by Kim Melton

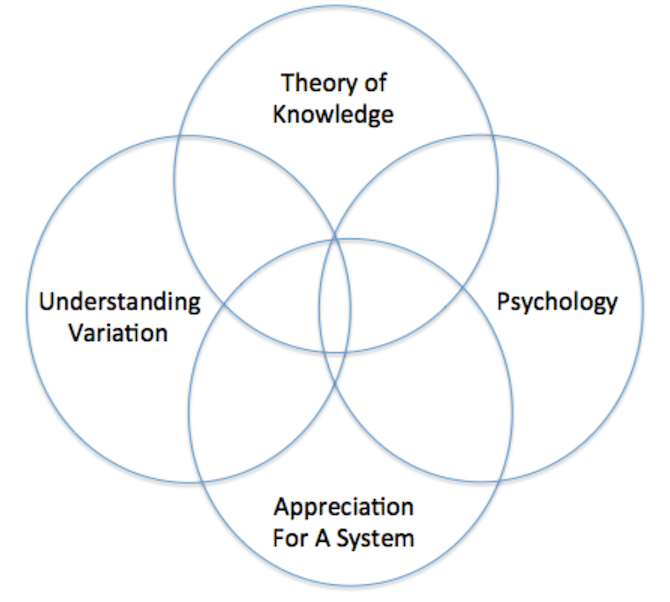

To put it simply, effective use of Big Data to make decisions depends on each of the four components of The System of Profound Knowledge and the interaction between the components. Big Data has always been around, if we think of it as datasets that are larger than our current ability to store and analyze.

Each time technology advances to allow for collection, storage, and analysis of more data, we have the opportunity to expand the boundaries of the systems that we can analyze and improve. We have the ability to incorporate data from more sources (both internal and external to our own organization) and to supplement “traditional data” with unstructured data. We have the opportunity to learn through the testing of new and expanded theories. At the same time, we need to be vigilant about understanding the data we are using. Are operational definitions the same across different data sources? Have we considered the impact of collecting data (e.g., personal privacy issues, perceived purpose for collection and analysis of data, etc.)? Do we know why, when, and how the data were collected?

Big Data (and analytics) has the potential to help individuals and organizations make better and more informed decisions…but more data does not automatically produce better results.

What is the aim? In the language of analytics, are data being collected and analyzed to Describe, Predict, or Prescribe? In Deming’s language, are we talking about an enumerative or analytic problem? Will our results be used to describe the current situation or to attempt to understand the cause system in order to understand and/or change future output from the process? Attempting to understand the cause system and to make recommendations for action that impacts the cause system requires a combination of technical skills and subject matter knowledge, and this usually involves cooperation among individuals with different perspectives.

What is our theory? Theory poses questions that guide analysis. As Dr. Deming would say, “Without a theory there is no learning.” Big Data allows us to investigate new theories, and techniques for visualizing data provide opportunities for recognizing patterns and generating additional questions (and theories). But more data, by itself, will not generate answers to unasked questions. In fact, as the availability of data increases, there is increased need for cooperation between individuals with different viewpoints.

With the availability of more and more data, how do we reconcile this with the recognition that there are aspects of working with people and organizations that need to be managed but that still cannot be measured? And, if too much focus is placed on “getting the right numbers,” people will “game” the systems to make the data look good.

How do we recognize when we start to move from “data informed” decision making to “data driven” decision making? When we cross the line from data informed to data driven decision making, we increase the likelihood that people (and organizations) will focus on the measurements “by whatever means.” When this happens, we can’t trust the measurements; cooperation and trust between individuals and departments decrease; definitions change; and opposition to change increases. With more data from more sources, an over-focus on the numbers can become a slippery slope!

The System of Profound Knowledge … helps us build knowledge. Without knowledge, “Off we go to the Milky Way.”

Kim Melton is a Professor of Management at the University of North Georgia, where she teaches Statistics for Business. In April 2015, she and Suzanne Anthony published “When Measurement Becomes the Mission, Don’t Trust the Measurement” in The Journal for Quality & Participation. She is a member of the American Statistical Association and the Decision Sciences Institute, and a Senior Member of the American Society for Quality.

Related: Statistical Thinking Activities: Some Simple Exercises With Powerful Lessons